Generative Artificial Intelligence (Gen AI) is the fourth wave of technology, following the mainframe, personal computer (PC) internet and mobile cloud waves. These prior waves of innovation have transformed industries from retail to media and ecommerce, and now Gen AI has the potential to transform a broader range of industries – underscoring the critical need for responsible deployment amid challenges and risks. This was the subject of a recent event hosted by responsible AI experts, including Michelle Dunstan, Sarah de Lagarde, Alison Porter and Antony Marsden.

What is AI?

Before we can delve into the topic of responsible AI, it’s important to distinguish Gen AI from human intelligence and from Artificial General Intelligence (AGI), the point at which models become as intelligent as, or more intelligent than, humans. Gen AI has the capability to generate new and original content with what are known as ‘transformer models’ – a type of AI model that generates human-like text by analysing patterns in large amounts of data. These models, which use an ever-increasing number of parameters (OpenAI’s GPT-4 is reported to have over a trillion), help algorithms learn, react, create and predict using historic data and interactions.

The rise of AI

Why are we suddenly hearing so much about AI? It has become a buzzword for everything, but why now? While AI has been a mainstay in science fiction novels and films for decades, recent product innovation has made its application a reality.

Like prior technology waves, this recent wave of Gen AI has been catalysed by rapid cost reductions that make the technology more accessible and scalable. For example, consider that the flash memory of the Apple iPhone 16 (128 GB) would have cost over US$5 million back in the 1990s. Rapid progress in transformer models enabling natural language processing, combined with the accelerated compute power offered by Nvidia’s graphics processing units (GPUs), has lowered the cost of compute to the point of democratising access and expanding use cases. These advancements have made AI a reality – enabling consumers and corporations to access and adopt technologies like ChatGPT and other large language models (LLMs) for a wide range of use cases more easily and affordably.

Waves of AI innovation

Unlike narrow investment themes, waves of innovation require investment across all layers of technology from the silicon through to infrastructure, devices, software and applications. This is why these waves tend to play out over long multi-year periods.

Each of these waves has created deeper and broader disruption with a widening of applications and use cases. Office applications, communication and ecommerce were all disrupted in the PC internet era, with media, entertainment, and transport impacted in the mobile cloud era.

As we enter this fourth wave, more sectors, including healthcare and transportation, are being disrupted and transformed. We can see parallels with the social, environmental, and regulatory challenges of past waves in this latest one too. Each prior wave similarly faced concerns over inappropriate use, privacy and its impact on the electrical grid.

Despite these challenges, past waves have seen increasing levels of technology adoption across more industries, as innovation aims to harness the power of new technologies to address critical challenges such as geopolitics, demographics, and supply chain vulnerabilities, and to enhance human productivity and collaboration.

Figure 1: Waves of AI innovation

Source: Citi Research, Janus Henderson Investors

Note: References made to individual securities do not constitute a recommendation to buy, sell or hold any security, investment strategy or market sector, and should not be assumed to be profitable. Janus Henderson Investors, its affiliated advisor, or its employees, may have a position in the securities mentioned. There is no guarantee that past trends will continue, or forecasts will be realised. The views are subject to change without notice.

Deploying AI responsibly

When considering responsible AI, it is crucial to start with the understanding that AI is merely a technology, inherently neither good nor bad. It is poised to have a wide range of both positive and negative impacts on humans, which can often be seen as two sides of the same coin. For instance, in the healthcare sector, AI holds the potential to significantly advance early disease detection. However, if the resulting data is not adequately protected, it might subsequently make obtaining health insurance more challenging. Similarly, while AI promises to enhance the workplace by eliminating tedious tasks, there are legitimate concerns about its capacity to displace entire careers.

From a climate perspective, AI applications have enormous potential to address some of the causes of climate change, yet the current expansion of the infrastructure is ironically contributing to an increase in carbon emissions in the short term.

In past compute waves, concerns over the internet and mobile cloud being enabled by fossil fuels were fuelled by power sector analysts, who extrapolated that the prevailing power use and carbon intensity would continue as demand rose. However, these forecasts failed to consider how the efficiency gains of these new technologies might reduce power consumption. Further, while the rising demand for data centres is driving increased energy demand, this is being partially offset by significant investment in new silicon, storage and networking technologies that will bend this energy demand curve and reduce potential future emissions.

In addition to these new technologies, we continue to see major hyperscalers explore alternative sources of energy. For example, Microsoft recently announced a deal with Constellation Energy concerning the recommissioning of an 835 megawatt (MW) nuclear reactor at the Three Mile Island site in Pennsylvania.1

This deal highlights the sheer scale of efforts being made to meet the growing power needs of AI and the increasing use of nuclear as a low-carbon energy source. This move is part of Microsoft’s broader commitment to its decarbonisation path, demonstrating how corporate power demands are intersecting with sustainable energy solutions.

AI is a domain filled with nuances and grey areas. Among the key risks to consider is the dual-use phenomenon. This was exemplified by a group of researchers who demonstrated that an AI-driven drug discovery platform, initially designed to find beneficial drugs, could be easily modified to develop harmful substances or bioweapons.

The misuse of Gen AI tools presents another significant concern. Early examples include Microsoft’s chatbot, Tay, which was manipulated into producing hate speech shortly after its release. This issue extends into serious realms such as cybercrime, highlighting the importance of conducting safety audits and engaging in red teaming – testing tools by attempting to break them before public release. For example, OpenAI’s extensive audit of its latest model, which will supplant its GPT-4 system, is a testament to the critical need for such precautions.

AI alignment

Aligning AI with the common good is an ongoing challenge, as illustrated by Swedish philosopher Nick Bostrom’s paperclip maximiser thought experiment, which examines the ‘control problem’: how can humans regulate a super-intelligent AI that is smarter than we are? This underscores the need for AI safety researchers, who aim to prevent unwanted behaviour from AI systems, to maintain their focus on ensuring that AI’s objectives align with human values and societal benefits.

Further, the occurrence of errors and hallucinations in AI-generated content, exemplified by the Air Canada chatbot incident, whereby incorrect information was given to a traveller, points to the need for greater transparency in AI decision-making processes. The issues of bias and the lack of transparency further complicate the responsible deployment of AI technologies, demanding concerted efforts to elucidate how AI models arrive at their outputs.

Ultimately, navigating the path toward responsible AI involves acknowledging its dual potential, addressing key risks through rigorous safety measures, ensuring alignment with societal values, and enhancing transparency and accountability in AI systems.

AI – how worried should we be?

The existential risk associated with AGI, which some expect to evolve out of the current wave of Gen AI once human-level intelligence is achieved, remains a hot topic of discussion. Concerns about AGI are often encapsulated by a term used in Silicon Valley, “p(doom)”, which refers to the probability that AI might surpass humanity and potentially enslave us. This concept raises intriguing theoretical questions. The so-called godfathers of AI, three researchers who have been awarded the Turing Award for their contributions, display a broad spectrum of opinions on this matter.

Yann LeCun, who is involved in AI research at Meta, adopts a relatively dismissive stance towards the existential risk of AGI, comparing the intelligence of current AI systems to that of a cat and describing such concerns as nonsensical. On the other hand, Geoffrey Hinton, a well-known figure in the AI community, left Google to voice his concerns about the rapid advancement of AI, fearing it could eventually outsmart humanity. Yoshua Bengio, another prominent researcher, has issued warnings about the potential catastrophe that could ensue if AI is not regulated in the near future. Given their proximity to AI research and development, their diverse perspectives warrant serious consideration.

Concentration of power

However, some argue that focusing on these long-term existential risks of AGI distracts from more immediate concerns. Observing the developments within major tech companies over the past two years, particularly regarding their ethics boards and safety teams dedicated to responsible AI, reveals significant changes. There have been instances of AI ethics bodies being dismantled, teams disbanded and whistleblowers raising alarms about the dangers of AI, fuelled by the competitive drive for innovation within these companies (Figure 2).

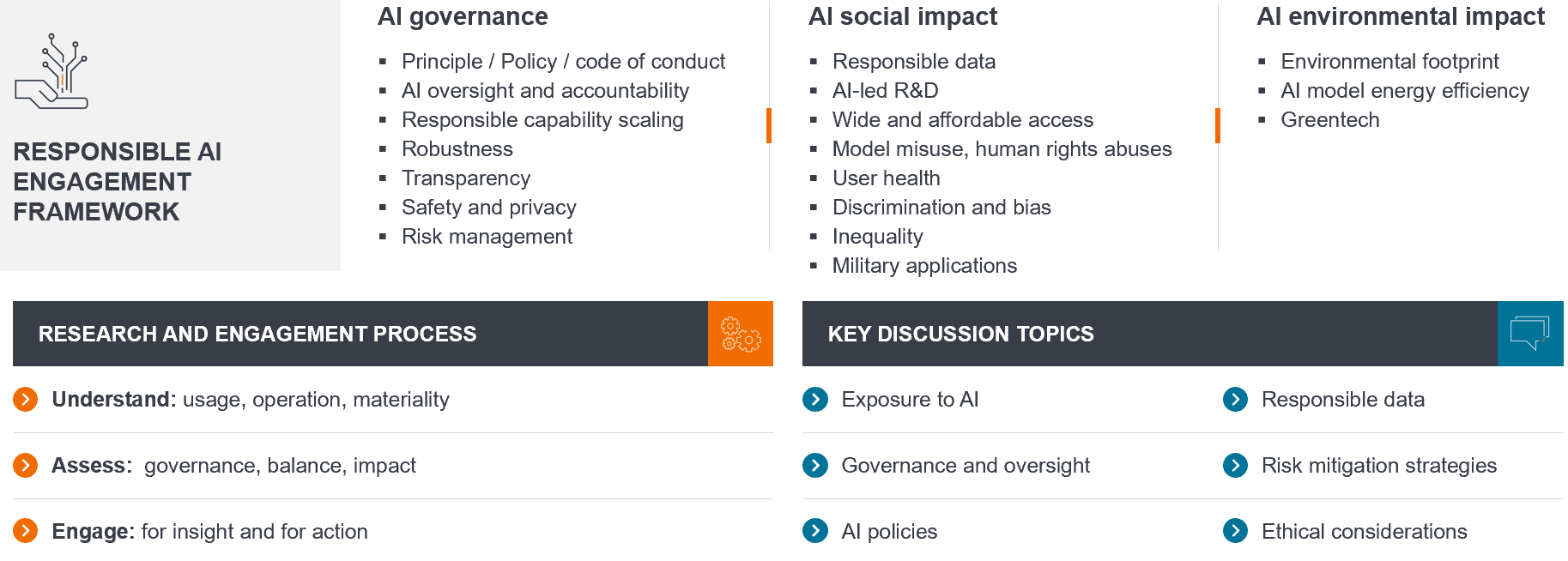

Figure 2: Responsible AI should be a priority for all companies

Source: Janus Henderson Investors

However, our engagements with these companies have made it apparent that AI has evolved from a theoretical aspect of business into a central component of their entire business model, necessitating the integration and centralisation of risk functions. This shift from theoretical to practical concerns has led many companies to devote more resources to safety issues, with a more holistic approach to AI manifesting in the merger and integration of internal bodies.

One of the issues that concerns us the most is the concentration of power within the realm of AI. This is not a new issue, as some of the largest technology companies have long been characterised by their dominant market positions, unique shareholding structures, and the immense influence wielded by a small number of individuals controlling this technology and its future direction. This concentration of power is a significant concern that merits attention.

Regulating innovation – striking the balance

Governments around the world are currently grappling with the rapid pace of change in AI, making it challenging to keep up with regulatory needs. At the country level, many governments have primarily adopted principles-based forms of regulation. China stands out for having already implemented quite stringent regulations, accompanied by severe penalties. By 2025 or 2026, numerous countries are expected to introduce much stricter regulations. The European Union (EU) has taken a significant step with the EU AI Act, one of the more well-known pieces of AI legislation, which has already come into effect. Its impact is anticipated to become more apparent in the US over the next year or two.

In the US, there is currently no federal regulation governing AI, though there have been lively debates around potential new regulations, such as those proposed in California, which were ultimately vetoed. This veto was influenced by concerns that such regulation could stifle innovation, despite significant public support for it. However, at the state level, regulations are beginning to emerge. For example, New York has introduced regulations concerning recruitment, bias and the use of AI, while various other states have started to implement their own measures. In California, regulations set to take effect next year will require content such as images created by AI to be watermarked, enabling detection.

While many countries are considering the implementation of AI regulations, there is also a strong desire to become hubs for AI innovation. This creates a competitive dynamic and presents a real trade-off, sparking active debates, particularly in the EU, about how to balance regulation with the promotion of innovation in the field of AI.

Navigating the waves of AI innovation

The journey through the evolving landscape of AI reveals a technology filled with potential to revolutionise every sector of society, from healthcare to transportation, while also posing significant challenges and ethical dilemmas. However, with great opportunity comes great responsibility.

The immediate concerns about Gen AI, namely the concentration of power and the necessity for effective regulation, highlight the complex terrain we must traverse. The actions taken by governments, the tech industry, and the global community in the coming years will be crucial in shaping the future of AI but will evolve differently in different regions based on their past – the political, cultural, and regulatory foundations put in place from prior compute waves. Regulatory developments are complex and must balance the drive for innovation with the imperative to safeguard ethical standards and societal well-being.

As we stand on the precipice of this technological frontier, the path forward requires a collaborative effort among all stakeholders to harness the transformative power of AI, driving for transparency, standards and frameworks to allow us all to monitor, identify and remedy challenges that will inevitably arise from the evolving use cases of AI. In doing so, we can ensure that AI serves as a force for good, enhancing human capabilities and fostering a more equitable and sustainable future for all.