Strong fundamentals, improving market dynamics, and rising investor demand for diversification position real estate for a re-rating in 2026.

Insights

Our latest thinking on the themes shaping today’s investment landscape. Explore timely updates, quarterly features and in-depth analysis straight from our experts.

The election outcome does not change Costa Rica's already improving economic fundamentals; rather, it makes the positive credit story more credible.

Discover why portfolio structure, not bubble timing, determines resilience. Learn how equity income strategies help investors capture AI-driven growth while reducing volatility and protecting capital during downturns.

Today’s ABS structures provide better transparency and investor protections.

A discussion of value opportunities in credit, collateralised loan obligations and mortgages, and why a deep understanding of each credit will be pivotal in 2026.

A discussion of concerns that AI-driven automation, agentic tools, and vibe coding could disrupt traditional SaaS business models.

Despite strong early-2026 gains, markets continue to underestimate the scale and longevity of Europe’s defense spending cycle.

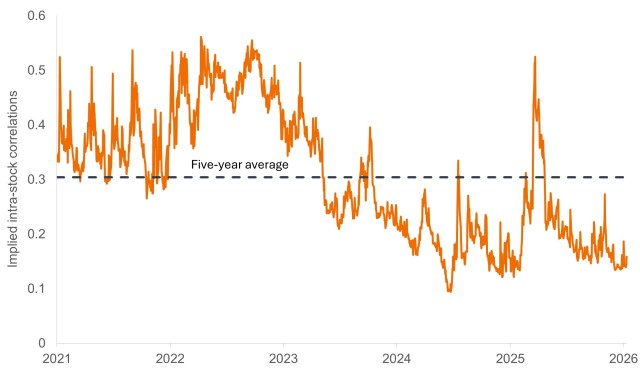

We attribute near-historic-low correlations among U.S. equities to the market pricing in little systemic risk.

A monthly market update featuring global equity and fixed income performance, sector and asset class trends, and key themes shaping the investment landscape.

Why active management, fundamental research, and selectivity across sectors are key to identifying opportunities while managing volatility in fixed income.

Where to find climate adaptation investment opportunities in an era of rising physical risks.