AI – Is the juice worth the squeeze?

Power availability is the biggest challenge for AI growth. Portfolio Manager Hamish Chamberlayne discusses how innovation seeks to meet AI's energy needs but highlights unresolved issues regarding its energy sources and the technology’s potential impact on climate.


Key takeaways:

- The intersection of artificial intelligence (AI) and the energy sector is revealing significant challenges and opportunities, particularly in the context of sustainability and the potential physical limitations to the technology’s growth.

- While significant advancements in computing efficiency are an enabling factor in AI’s growth, these gains do not necessarily equate to a reduction in the technology’s overall energy consumption.

- For investors, the evolving dynamics between AI and the energy sector pose opportunities and challenges, with significant investment in clean energy required to meet AI power demand sustainably.

The power demands of artificial intelligence (AI), combined with the impacts of reindustrialisation, electric vehicles (EVs), and the transition to renewable energy, mean the technology represents a significant investment opportunity across the entire value chain, including data centre and grid infrastructure, as well as electrification end markets.

However, it is important to always consider and question potential risks, particularly the physical limitations on AI’s growth, where the energy to satisfy its insatiable thirst will come from, and how its associated emissions may fuel new climate concerns.

What is enabling AI?

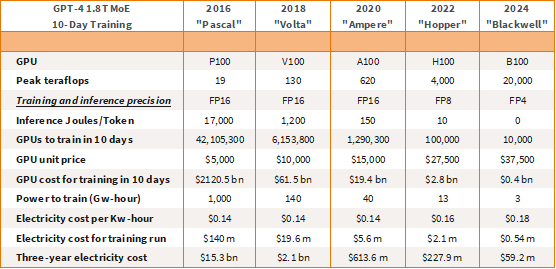

The advancement of AI is primarily enabled by US-based chipmaker Nvidia’s increasingly efficient graphics processing units (GPUs). Figure 1 illustrates the evolution of its GPUs, showcasing the efficiency gains, depicted in terms of teraflops per GPU, per watt, across models from Pascal (2016) to its latest iteration, Blackwell (2024).

Currently, to train OpenAI’s ChatGPT-4 in just ten days, one would need 10,000 Blackwell GPUs costing roughly US$400 million. In contrast, as recently as six years ago, training such a large language model (LLM) would have required millions of the older type of GPUs to do the same job. In fact, it would have required over six million Volta GPUs at a cost of US$61.5 billion – making it prohibitively expensive. This differential underscores not only the substantial cost associated with Blackwell’s predecessors but also the enormous energy requirements for training LLMs like ChatGPT-4.
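To make the scale of that differential concrete, the short Python sketch below works through the arithmetic using only the figures quoted above. It is an illustration rather than a costing model: the Volta count is given as "over six million", so the implied Volta unit cost is an upper bound, and the variable names are ours.

```python
# Figures quoted in the text (US dollars and GPU counts).
blackwell_gpus = 10_000
blackwell_total_cost = 400e6   # roughly US$400 million
volta_gpus = 6_000_000         # "over six million", so treated as a lower bound
volta_total_cost = 61.5e9      # US$61.5 billion

# Implied cost per GPU for each generation.
blackwell_unit_cost = blackwell_total_cost / blackwell_gpus
volta_unit_cost = volta_total_cost / volta_gpus  # upper bound, given the lower-bound count

# How much larger the Volta fleet and bill would have been.
gpu_count_ratio = volta_gpus / blackwell_gpus
fleet_cost_ratio = volta_total_cost / blackwell_total_cost

print(f"Implied cost per Blackwell GPU: US${blackwell_unit_cost:,.0f}")
print(f"Implied cost per Volta GPU (at most): US${volta_unit_cost:,.0f}")
print(f"GPU count ratio: {gpu_count_ratio:,.0f}x")
print(f"Total cost ratio: {fleet_cost_ratio:,.2f}x")
```

On these figures, each Blackwell GPU costs more than each Volta GPU, yet the total bill falls by a factor of more than 150 because so many fewer chips are needed.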

Figure 1: The cost curve

Source: NVIDIA, the Next Platform, epochai.org

Previously, the energy cost alone for training such an LLM could reach as much as US$140 million, rendering the process economically unviable. However, the significant leap in the computing efficiency of these chips, particularly in terms of power efficiency, has now made it economically feasible to train LLMs.

This point is illustrated in Figure 1 under the metric ‘inference joules/token’, which is used to measure the energy efficiency of processing natural language tasks, particularly in the context of LLMs like those used for generating or understanding text (e.g., chatbots, translation systems). Here we can see a 25x improvement in efficiency from Nvidia’s Hopper (10) to its Blackwell (0.4) successor.
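The 25x figure follows directly from the two joules-per-token values read off Figure 1. The sketch below reproduces the ratio and converts it into energy for a workload of one billion tokens. That workload size is a hypothetical chosen purely for illustration, and the helper name `energy_kwh` is ours.

```python
JOULES_PER_KWH = 3.6e6  # 1 kWh = 3.6 million joules

hopper_j_per_token = 10.0     # from Figure 1
blackwell_j_per_token = 0.4   # from Figure 1

# Efficiency improvement between the two generations.
improvement = hopper_j_per_token / blackwell_j_per_token


def energy_kwh(tokens: int, joules_per_token: float) -> float:
    """Energy in kWh needed to process `tokens` at a given joules-per-token rate."""
    return tokens * joules_per_token / JOULES_PER_KWH


tokens = 1_000_000_000  # hypothetical workload, not a figure from the article
hopper_kwh = energy_kwh(tokens, hopper_j_per_token)
blackwell_kwh = energy_kwh(tokens, blackwell_j_per_token)

print(f"Improvement: {improvement:.0f}x")
print(f"Hopper: {hopper_kwh:,.0f} kWh, Blackwell: {blackwell_kwh:,.0f} kWh")
```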

The sting in the tail

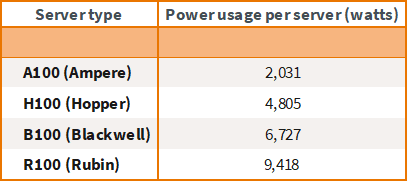

Nvidia’s innovations in the power efficiency of its chips have indeed enabled advancements in AI. However, there is a significant caveat to consider. Although we often assess the cost-effectiveness of these chips in terms of computing power per unit of energy – Floating Point Operations Per Second (FLOPS) per watt – it’s important to note that the newer chips come with a higher power rating (Figure 2). This means that, in absolute terms, these new chips consume more power than their predecessors.

Figure 2: Nvidia GPU power ratings

Source: Morgan Stanley research

Note: Power usage per server (assuming four chips per server).

Couple this with Nvidia’s strong sales growth, which indicates incredible demand for computing power from companies like Alphabet (Google), OpenAI, Microsoft and Meta, driven by the ever-increasing size of the datasets used to develop AI technologies, and the power implications of AI’s rapid expansion have begun to raise eyebrows.

Interestingly, thanks to efficiency improvements, global data centre power consumption has remained relatively constant over the past decade, despite a massive twelvefold increase in internet traffic and an eightfold rise in data centre workloads.1 An International Energy Agency (IEA) report highlighted how data centres consumed an estimated 460 terawatt-hours (TWh) in 2022, representing roughly 2% of global electricity demand,2 which was largely the same level as it was in 2010.

But, with the advent of AI and its thirst for energy, data centre energy consumption is set to surge. In fact, the IEA estimates that data centres’ total electricity consumption could more than double to reach over 1,000 TWh in 2026 – roughly equivalent to the electricity consumption of Japan.3 This highlights how demand for AI is creating a paradigm shift in power demand growth.

Since the Global Financial Crisis (GFC), demand for electricity in the US grew by a broadly flat 1% a year – until recently.4 Driven by AI, increasing manufacturing/industrial production and broader electrification trends, US electricity demand is expected to grow 2.4% annually.5 Further, based on analysis of available disclosures from technology companies, public data centre providers and utilities, and data from the Environmental Investigation Agency, Barclays Research estimates that data centres account for 3.5% of US electricity consumption today, and data centre electricity use could be above 5.5% in 2027 and more than 9% by 2030.6
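These projections are easier to compare once expressed as compound growth. The sketch below derives the annual growth rate implied by the IEA figures (460 TWh in 2022 to 1,000 TWh in 2026) and contrasts US demand growing at 1% versus 2.4% a year. The ten-year horizon is our assumption for illustration only, and since the IEA figure is "over 1,000 TWh", the implied rate is a minimum.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


def compound(rate: float, years: int) -> float:
    """Multiple reached after growing at `rate` per year for `years` years."""
    return (1 + rate) ** years


# IEA figures quoted in the text: 460 TWh (2022) to over 1,000 TWh (2026).
data_centre_growth = cagr(460, 1_000, 2026 - 2022)

# US electricity demand: historical ~1% a year versus the expected 2.4% a year.
HORIZON_YEARS = 10  # hypothetical horizon, not from the article
old_path = compound(0.01, HORIZON_YEARS)
new_path = compound(0.024, HORIZON_YEARS)

print(f"Implied data centre growth: {data_centre_growth:.1%} a year")
print(f"US demand after {HORIZON_YEARS} years at 1.0%: x{old_path:.2f}")
print(f"US demand after {HORIZON_YEARS} years at 2.4%: x{new_path:.2f}")
```

The IEA figures imply growth of a little over 21% a year, against a decade in which consumption was broadly flat.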

The innovation, efficiency, and sustainability paradox

This paradigm shift introduces the concept of the Jevons Paradox, which has implications for energy consumption and environmental sustainability. It suggests that simply improving the efficiency of resource use is not enough to reduce total resource consumption.

The paradox is named after William Stanley Jevons, an English economist who first noted this phenomenon in the 19th century during the Industrial Revolution. In his 1865 book “The Coal Question,” Jevons observed that technological improvements in steam engines made them more efficient in using coal. However, instead of leading to a reduction in the amount of coal used, these efficiencies led to a broader range of applications for steam power. As a result, the overall consumption of coal increased dramatically.

This paradox appears to be equally applicable today as we stand at the threshold of a new AI-powered industrial revolution. As innovation by chipmakers drives a rapid rise in the computational power and efficiency of chips, the potential productivity benefits of AI across various industries are resulting in even greater demand for the technology, which in turn is leading to an increase in energy consumption despite these efficiency improvements.

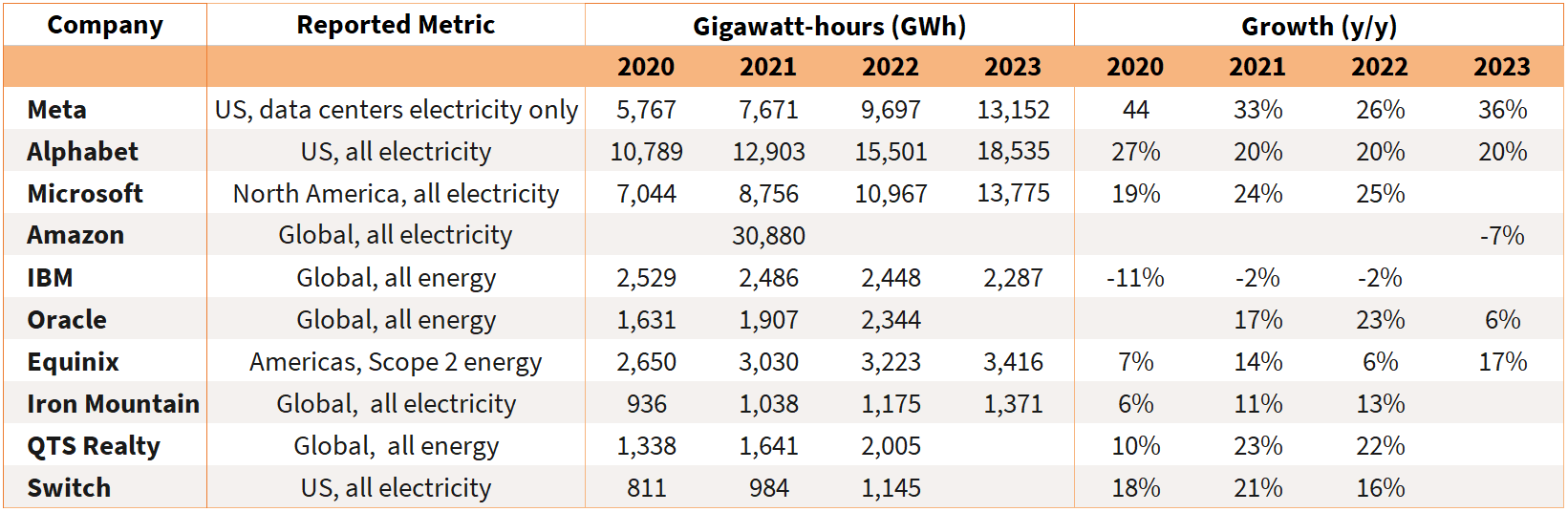

To put this into context, in theory, transitioning from a Hopper to a Blackwell-powered data centre should result in a fourfold reduction in power consumption. However, as depicted by the data in Figure 3, the opposite is true, because large AI companies and hyperscalers are instead maximising the use of these more powerful, efficient chips. This has resulted in the number of GPUs within a data centre increasing, underscoring the essence of the Jevons Paradox whereby increased efficiency leads to greater overall consumption due to expanded use.
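The mechanics of the paradox reduce to a single ratio: total power scales with the amount of work demanded divided by the work delivered per unit of power. The sketch below applies the fourfold efficiency gain cited above to three workload scenarios. The workload multiples are hypothetical, chosen only to show the three regimes, and are not taken from Figure 3.

```python
def relative_power(workload_growth: float, efficiency_gain: float) -> float:
    """Total power relative to the starting point (1.0 means unchanged)."""
    return workload_growth / efficiency_gain


EFFICIENCY_GAIN = 4.0  # the fourfold Hopper-to-Blackwell gain cited in the text

# Hypothetical workload multiples: unchanged, matching the gain, exceeding it.
for workload_growth in (1.0, 4.0, 10.0):
    power = relative_power(workload_growth, EFFICIENCY_GAIN)
    print(f"workload x{workload_growth:g} -> total power x{power:g}")
```

With an unchanged workload, power falls to a quarter. Once the workload grows by more than the efficiency gain, total consumption rises despite the better chips, which is the Jevons effect in miniature.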

Figure 3: Data centre provider energy and electricity use

Source: Company reports and Barclays Research

Critical questions

A historical barrier to AI development has been energy costs. Considering prevailing trends and their power implications, a crucial question arises: Where is this additional power going to come from and what are the implications for emissions?

In exploring this issue, three key areas emerge as focal points:

- Electrification value chain

The power demands of AI, combined with the impacts of reindustrialisation, EVs, and the shift to renewable energy, are creating strong market conditions for companies exposed to electrification. This is exemplified by power companies Vistra and Constellation Energy, which were among the best-performing US stocks year-to-date, rising more than 282% and 105% respectively at the time of publication.7

- Physical constraints

The potential physical constraints to AI’s growth must also be examined. This encompasses not only the limitations of current technology and infrastructure but also the availability of resources needed to sustain the rapidly rising power demands of the technology.

- Emissions profile

To address these issues, big hyperscalers have restated their commitments to decarbonisation pathways and some have turned towards nuclear energy as a solution, but this brings its own environmental considerations. We must consider the emissions profile of AI and the broader environmental impact of increased power consumption. This includes not just the immediate emissions from power generation but also its long-term sustainability. The emissions profiles of big tech companies have barely declined over the last several years, with the rise of AI creating even larger energy demands. According to research by AI startup Hugging Face and Carnegie Mellon University, using generative AI to create a single image takes as much energy as fully charging a smartphone.8

Nuclear energy to fuel AI power demand

To illustrate the real-world implications of AI’s increasing power demands, Microsoft recently announced a deal with Constellation Energy concerning the recommissioning of an 835 megawatt (MW) nuclear reactor at the Three Mile Island site in Pennsylvania.9

This deal highlights the sheer scale of efforts being made to meet the growing power needs of AI. The move is part of Microsoft’s broader commitment to its decarbonisation path, demonstrating how corporate power demands are intersecting with sustainable energy solutions.

The cost of recommissioning the nuclear reactor is estimated at US$1.6 billion. The reactor is projected to take three years to become operational, with Microsoft targeting a 2028 completion date.

In October, Alphabet finalised an order for seven small modular reactors (SMRs) from California-based Kairos Power to provide a low-carbon solution to power its data centres amid rising demand growth for AI and cloud storage. The first of these SMRs is due to be completed by 2030 with the remainder scheduled to go live by 2035.10 Amazon also announced that it has signed three new agreements to support the development of nuclear energy projects – including the construction of several new SMRs to address growing energy demands.11

These initiatives underscore the significant investments and timeframes involved in securing the additional power capacities required to support the escalating energy demands of modern computing and AI technologies. Further initiatives are expected, given that incremental demand for data centre capacity in the US market is projected to grow by 10% per year for the next five years.12 This growth could result in data centres representing up to 10% of the total US energy supply by 2030. That is significant given that Rystad Energy forecasts that total US power demand will grow by 175 TWh between 2023 and 2030, bringing the country’s demand close to 4,500 TWh.13
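A rough sense of scale can be had by compounding the figures quoted above. The sketch below is back-of-envelope arithmetic only. It treats "close to 4,500 TWh" as exactly 4,500 TWh and applies the 10% share to power demand, although the text refers to energy supply; both are simplifying assumptions on our part.

```python
# Figures quoted in the text.
ANNUAL_GROWTH = 0.10         # 10% a year growth in data centre capacity demand
YEARS = 5
US_DEMAND_2030_TWH = 4_500   # "close to 4,500 TWh", rounded for illustration
FORECAST_GROWTH_TWH = 175    # growth in total US demand, 2023 to 2030
DATA_CENTRE_SHARE = 0.10     # "up to 10%", applied here to power demand

# Five years of 10% growth compounds to more than a 50% increase.
capacity_multiple = (1 + ANNUAL_GROWTH) ** YEARS

# Implied 2023 starting point and the size of a 10% share in 2030.
implied_2023_demand_twh = US_DEMAND_2030_TWH - FORECAST_GROWTH_TWH
implied_data_centre_twh = DATA_CENTRE_SHARE * US_DEMAND_2030_TWH
total_growth_pct = FORECAST_GROWTH_TWH / implied_2023_demand_twh

print(f"Capacity demand after {YEARS} years: x{capacity_multiple:.2f}")
print(f"Implied 2023 US demand: {implied_2023_demand_twh:,} TWh")
print(f"10% share of 2030 demand: {implied_data_centre_twh:,.0f} TWh")
print(f"Total US demand growth 2023-2030: {total_growth_pct:.1%}")
```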

While Microsoft is committing to nuclear power, a carbon-free source of electricity, as part of its decarbonisation efforts, we remain cautious and concerned about how the energy gap required to support this growth in AI and data centres will be filled.

Powering the future

Earlier this year, we engaged with Microsoft on its increase in emissions and commitment to renewable energy sourcing for data centres. In August, we were pleased to see Microsoft address concerns regarding the increasing energy requirements of AI and the resulting shift towards sustainable practices across the industry during its presentation to the Australian Senate Select Committee on Adopting AI.14

The hyperscaler acknowledged that AI models and related services require a lot more power than traditional cloud services and that this was a key issue the industry needed to address. Microsoft also stated that it remained on course to achieve its 2030 net zero and water-positive targets in its sustainability strategy. While the increased power demands were unknown in 2020 when the targets were set, the use of renewable and nuclear energy should enable the commitment to be sustainably met.

Microsoft founder Bill Gates has urged global policymakers to refrain from going “overboard” with regard to their concerns about AI’s energy footprint, noting that the technology will likely play a decisive role in achieving net zero ambitions by reducing global energy demand.

AI and the energy transition

Advancements in AI, when paired with innovations in renewables, may hold the key to sustainably meeting rising energy demand. The IEA reported that power sector investment in solar photovoltaic (PV) technology is projected to exceed US$500 billion in 2024, surpassing all other generation sources combined.15 By integrating AI into various solar energy applications, such as using technology to analyse meteorological data to produce more accurate weather forecasts, intermittent energy supply can be mitigated.16 Researchers are also relying on AI to accelerate innovation in energy storage systems, given that existing conventional lithium batteries are unable to fulfil efficiency and capacity requirements.17 While AI will create additional demand for energy, it also has the potential to solve challenges related to the net zero transition.

In April, the US Department of Energy (DoE) released a report outlining how AI will likely play a vital role in accelerating the development of a 100% clean electricity system.18

Significant opportunities in the following areas were outlined:

- Better grid planning: Employing detailed climate data from the National Renewable Energy Laboratory, combined with sophisticated generative machine learning techniques, to better integrate fluctuating renewable energy sources.

- Boosting grid resilience: The capacity of AI to swiftly analyse vast data sets and identify intricate patterns can help operators of the electrical grid quickly identify issues and react to, or prevent, interruptions in power supply.

- Identifying innovative materials: Accelerating the discovery of new materials is essential for clean energy technologies, including batteries that require less lithium, new materials that are effective in solar energy, or enhanced catalysts for increasing hydrogen production.

Beyond the grid, AI has the potential to play a significant role in supporting a variety of applications that can contribute to the development of a fair, clean energy economy, the DoE noted. Achieving a net zero greenhouse gas (GHG) emissions target throughout the economy involves overcoming distinct challenges in various sectors, such as transportation, buildings, industry, and agriculture.

We are witnessing signs of growing demand for AI across various sectors including healthcare, transportation, finance, and industry, and we anticipate this to be a sustained, long-term trend.

As a team we have benefited from involvement in AI and the wider movements towards electrification and reindustrialisation. However, we are conscious of the potential increase in carbon emissions due to AI’s expansion and are closely monitoring the decarbonisation pledges of companies like Nvidia and Microsoft. While we anticipate a short-term rise in emissions, we are optimistic that AI will ultimately contribute positively to decarbonisation efforts through innovation and productivity enhancements and are confident that the heightened demand for power will be addressed by increased investment in clean energy.

Despite this being a year marked by significant political changes worldwide, our outlook for investing in sustainable equities remains positive. Inflationary pressures are easing, and monetary policy appears to be taking a more supportive direction. Regardless of the political landscape, the fundamental trends we are focused on are continuing to advance and develop.

IMPORTANT INFORMATION

References made to individual securities do not constitute a recommendation to buy, sell or hold any security, investment strategy or market sector, and should not be assumed to be profitable. Janus Henderson Investors, its affiliated advisor, or its employees, may have a position in the securities mentioned.

There is no guarantee that past trends will continue, or forecasts will be realised.

Past performance does not predict future returns.

Sustainable or Environmental, Social and Governance (ESG) investing considers factors beyond traditional financial analysis. This may limit available investments and cause performance and exposures to differ from, and potentially be more concentrated in certain areas than the broader market.

1. International Energy Agency, ‘Global trends in internet traffic, data centre workloads and data centre energy use, 2010-2019’ (last updated 3 June 2020)

2. International Energy Agency, ‘Electricity 2024: Analysis and forecast to 2026’

3. International Energy Agency, ‘Electricity 2024: Analysis and forecast to 2026’

4. University of Wisconsin-Madison, ‘The Hidden Cost of AI’ by Aaron R. Conklin (21 August 2024)

5. Goldman Sachs, ‘Generational growth: AI, data centres and the coming US power demand surge’ (28 April 2024)

6. Barclays Research, ‘Artificial Intelligence is hungry for power’ (28 August 2024)

7. Google Finance, Market summary for Vistra, Constellation Energy (12 November 2024)

8. MIT Technology Review, ‘AI’s carbon footprint is bigger than you think’ (5 December 2023)

9. Constellation Energy, press release (20 September 2024)

10. Kairos Power, press release (14 October 2024)

11. Amazon, press release (16 October 2024)

12. McKinsey, ‘Investing in the rising data centre economy’ (17 January 2023)

13. Rystad Energy, ‘Data centers and EV expansion create around 300 TWh increase in US electricity demand by 2030’ (25 June 2024)

14. ARNnet, ‘Microsoft A/NZ acknowledges local energy usage increase due to AI’ (August 2024)

15. International Energy Agency, World Energy Investment 2024 Report

16. World Economic Forum, ‘Sun, sensors and silicon: How AI is revolutionizing solar farms’ (2 August 2024); ERGO Group, ‘How AI is helping with battery development’ (20 August 2024)

17. Dean H. Barrett and Aderemi Haruna, Molecular Sciences Institute, School of Chemistry, University of the Witwatersrand, ‘Artificial intelligence and machine learning for targeted energy storage solutions’

18. US Department of Energy, AI for Energy: Opportunities for a Modern Grid and Clean Energy Economy (April 2024)

FLOPS: Stands for Floating Point Operations Per Second (FLOPS), and it’s a measure of a computer’s performance, especially in fields that require a large number of floating-point calculations. Higher FLOPS indicate more calculations can be done per second, which is particularly relevant for training and running complex machine learning models.

FLOPS/Watt: A measure of computational efficiency, indicating how many floating-point operations a system can perform per unit of power consumed. The higher the FLOPS/Watt, the more energy-efficient the system is.

Inference: Within the context of this article, this refers to the process of using a trained model to make predictions or decisions based on new, unseen data. In the context of language models, inference would involve tasks like generating text responses, translating languages, or answering questions.

Joule: A unit of energy in the International System of Units. It’s a measure of the amount of work done, or energy transferred, when applying one newton of force over a displacement of one meter, or one second of passing an electric current of one ampere through a resistance of one ohm.

Monetary policy: The policies of a central bank, aimed at influencing the level of inflation and growth in an economy. Monetary policy tools include setting interest rates and controlling the supply of money. Monetary stimulus refers to a central bank increasing the supply of money and lowering borrowing costs. Monetary tightening refers to central bank activity aimed at curbing inflation and slowing down growth in the economy by raising interest rates and reducing the supply of money. See also fiscal policy.

Net zero: A state in which greenhouse gases, such as carbon dioxide (CO2), that contribute to global warming and go into the atmosphere are balanced by their removal from the atmosphere.

Per token: In natural language processing (NLP), a “token” typically refers to a piece of text, which could be a word, part of a word, or even a character, depending on the granularity of the model. Thus, “per token” means that the energy usage is being measured with respect to each individual piece of text processed by the model.

Watt: A unit of power in the International System of Units, representing the rate of energy transfer of one joule per second.

All opinions and estimates in this information are subject to change without notice and are the views of the author at the time of publication. Janus Henderson is not under any obligation to update this information to the extent that it is or becomes out of date or incorrect. The information herein shall not in any way constitute advice or an invitation to invest. It is solely for information purposes and subject to change without notice. This information does not purport to be a comprehensive statement or description of any markets or securities referred to within. Any references to individual securities do not constitute a securities recommendation. Past performance is not indicative of future performance. The value of an investment and the income from it can fall as well as rise and you may not get back the amount originally invested.

Whilst Janus Henderson believe that the information is correct at the date of publication, no warranty or representation is given to this effect and no responsibility can be accepted by Janus Henderson to any end users for any action taken on the basis of this information.